Talking research integrity at Stanford

Elisabeth Bik, Ruth O'Hara, and I try to explain how to get out of the mess we're in

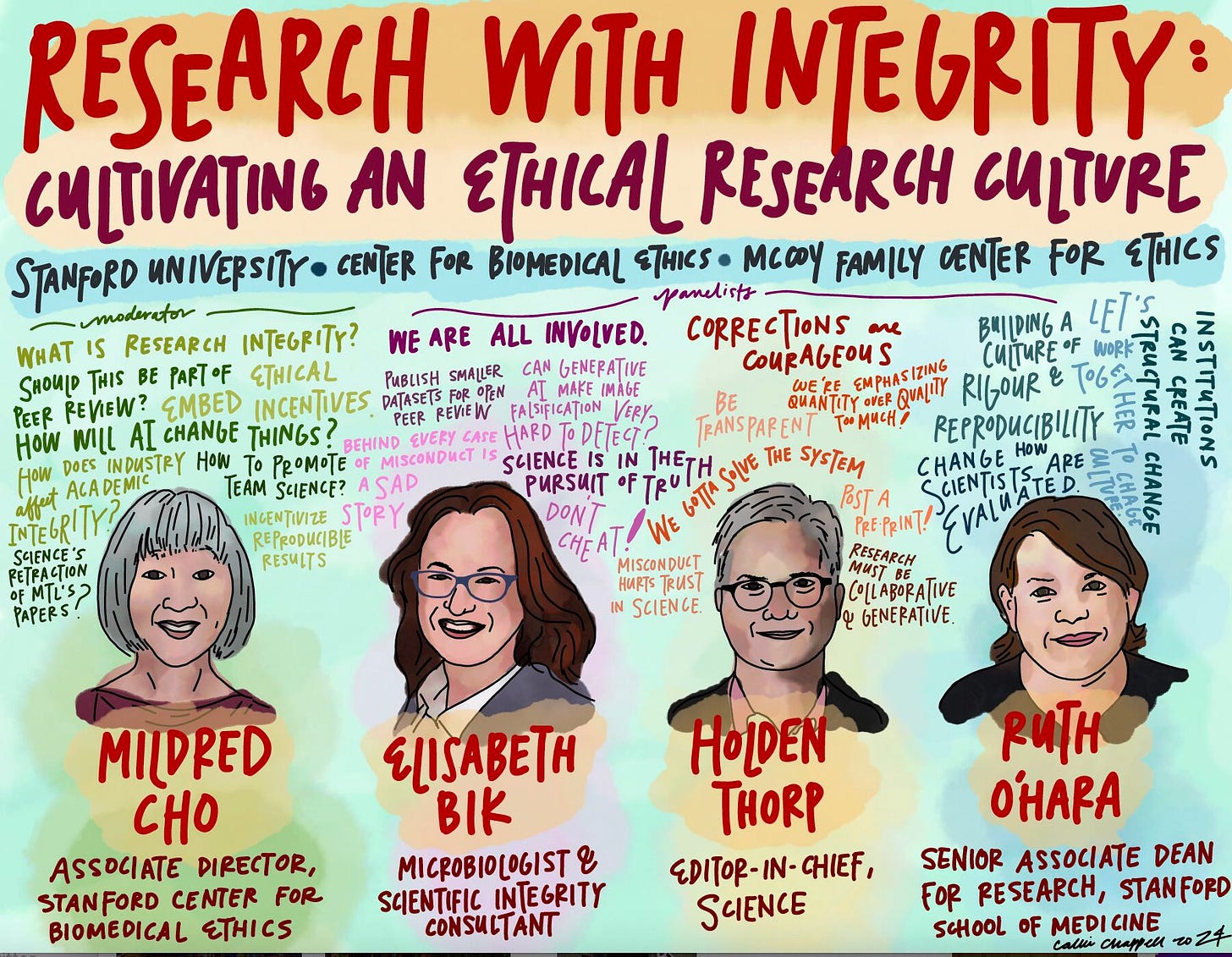

Last month, I was on a panel at Stanford talking about research integrity amidst the continued parade of news of problem papers from Stanford, Rochester, Harvard, Duke, Dana-Farber and more. I had the honor of being on the panel with Elisabeth Bik, who has led the charge on finding problematic images in papers. Callie Chappell drew a fun cartoon of the event.

The video of the event is now posted:

My introductory remarks are pasted below. There was a lot of great stuff in the questions, and Elisabeth and Ruth had much wisdom under the fine moderation of Mildred Cho.

My shtick about this stuff is that these problems are systemic and not “bad apple” problems where you can blame one person and move on. And the main systemic problem is the temptation to do these things coupled with the reticence of everyone, especially the institutions, to take any responsibility for them. One of the things I said in the Q&A that I liked was that “when you see a falsified paper, that’s just a readout of the lack of humanity in academic science.”

Here are my introductory remarks, but it’s best to watch the whole thing and hear from everyone:

It's really an honor to be on the program with Elisabeth. We talk about Elisabeth a lot because she writes to me, and then we have long meetings about what we're going to do when we hear from her. Not talking about her personally, but the things she shares with me, which I don't always love getting.

But academics are people who should be able to hold two thoughts in their head at the same time, and that is, we may not like hearing from Elisabeth, but what she's doing is making science a lot better. And both of those things are true.

So it's an honor to be here with you. And Dr. O'Hara, as a former administrator, I know how hard your job is. Thank you for doing it. I'm going to say some things about administration today. And I just want to point out, I don't like administration but I do like administrators. Administrators are human beings mostly trying to do the best they can. And what I'm going to try to persuade you of is that a lot of the problems we have in science are systemic. And as long as we're treating them as problems of individual people, we're not going to get to the solution.

So as Elisabeth already pointed out, this is an unbelievable moment for research integrity. But I want to point out that a lot of people, including John Ioannidis who's here and Elisabeth, and lots of people at journals, we've been working on this for a long time. It's only recently that this has been something that's in the news with the frequency that it's at. And it's not going to stop anytime soon.

I don't know if you've noticed, but the Wall Street Journal now has a full-time reporter, Nidhi Subbaraman, who is excellent. And I've spent a lot of time talking to her, and this is her whole beat. So for all we know, she's writing up somebody in this room right now. One of those that Elisabeth had in her slides was one that came out since we had our planning call for this talk.

So this moment is not going to end anytime soon. Eventually, people will probably move on to another topic. But for now, this is the thing that is with us. And like I said, a bunch of us have been working on this for a long time. I didn't really know that much about all this until I took this job in 2019.

But just so that you understand where I'm coming from, that was a very nice introduction but it left out one important thing, and that is that I left the University of North Carolina in a scandal. It was a scandal in intercollegiate athletics.

It started when I was in middle school. It's been investigated up and down every way possible. Nobody ever found anything that I did that I should be culpable for, but I presided over the institution's response, which also is two things at the same time. The first is that it was sluggish and contributed to everything that went wrong. The second is we did pretty much what any university would have done in the same situation.

I learned then that it's better not to give evasive answers because I think what happens in this is institutions give evasive answers and that's what prolongs these things. And the longer they're prolonged, the more things that go wrong and the more the journalists have to write about. I mean, the longer these things go on, you're just giving a gift to the journalists because they can just write and write and write about all the little twists and turns.

So the more evasive the institution's answers are, the longer the scandal is going to go. And that's certainly what happened to me.

And so I've tried ever since I had the good fortune to get out alive to do the opposite of that, which is what I'm trying to do on this matter. So we just announced that, as Elisabeth said, there are tools for seeing these image duplications. We're using Proofig. There's another one called ImageTwin. They both have their strengths and weaknesses.

We preferred Proofig when we tested it. We ran it for several months on a couple of our journals last year before we announced at the first of the year that we're doing it for everything. When we ran it on 300 papers, we found a lot of inadvertent errors. And that was great because the authors got a little report back that said, hey, you might not have noticed, but here's this thing in your figure. And if you publish it, Elisabeth or somebody else is going to see this problem. Why don't you change it? And it was an inadvertent error or one that wasn't very significant. They corrected it. That saved all of us a lot of work in the future.

But there were two out of the 300 that we were about to publish — and we're a little family that only has six journals — there were two out of 300 that we were getting ready to publish that we had to withdraw because of the analysis that we did. So that's two out of 300.

We're a small family of journals with only six journals. That's only 3,000 papers per year. So two out of 300, that's close to 1%. We know we don't catch everything with the tools because now that the tools are here, you can just make your manipulations, run it through the tool and see if the tool picks it up.

So the tool is certainly not going to put Elisabeth's eyes out of business.

And let's say we're catching half or something like that. That gets you pretty close to the same percentage that Elisabeth had for the number of fraudulent papers going through. And we're a selective family of journals, so a lot of these more open journals that take a lot of things are probably catching even less.

My analysis of that is that, of course, we should try to do more to change the culture, to catch things, to use our tools, to help address a lot of the systemic problems that Elisabeth was talking about. But we're never going to catch everything.

And the only solution to this is to get better at responding when things happen. Some years we have five retractions in Science, and we have 700 papers per year. People say, aren't you worried about having five retractions? I said, no. The only number of retractions I would be worried about would be zero because I know the answer isn't zero. I know that there's no way with all the human beings involved that we're catching everything. And so if you read my editorials about this, you'll see that my general thing is that we need to just lower the stigma associated with correcting papers, we need to get the institutions to respond more proactively, and we need the PIs to understand that, as I said in a piece, correction is courageous.

Science is supposed to be a work in progress that's self-correcting. And so we're all capable of overlooking errors that are going to get into the literature. If we correct them quickly without litigation, without media firestorms, without finger pointing, then we have a better chance to get the public to trust that we can do this. And I say that as part of the problem.

The journals have contributed to this problem. We're trying to do better.

And I think we are. We're publishing more editorial expressions of concern. We're publishing retractions faster. We are doing things like giving deadlines to institutions and saying, if we don't hear from you by such and such a date, we're going to do something with this paper.

And we're trying to stress the fact that the journal doesn't care whether it's fraud or whether it's an honest error. Our job is to have a robust scientific record.

So all the stuff about this postdoc did this or I never saw this or the PI's trying to deflect blame or whatever, we're not interested in all that. We're interested in whether we should correct the paper or not. And if we could get people to accept that correcting the paper is an OK thing to do, we could go ahead and do that. And if the institution wants to argue about who did what, then you all knock yourselves out because the people who come to the paper would now know that there's a problem there. And if instead there's an investigation and we can't tell you what's going on because we're protecting confidentiality and all of this stuff, then why would people trust that?

And so to me, the actors that are contributing the most to the problem are the institutions. Again, the administration, not the administrators. The administrators are working under this lumbering bureaucracy that they can barely get out from under. But the institutions need to address a lot of the systemic problems that are causing this: the pressure on the PIs to bring in grants and do a million different things. And they need to do a much better job at giving postdocs and trainees and graduate students a holistic future.

If all the pressure is to get an academic job and you can't get an academic job without these same papers, then, first of all, you're depriving somebody of the opportunity to decide what they would like to do with their life. And the second is that you're putting even more pressure on these errors.